Researchers develop touchscreens with tactile feedback

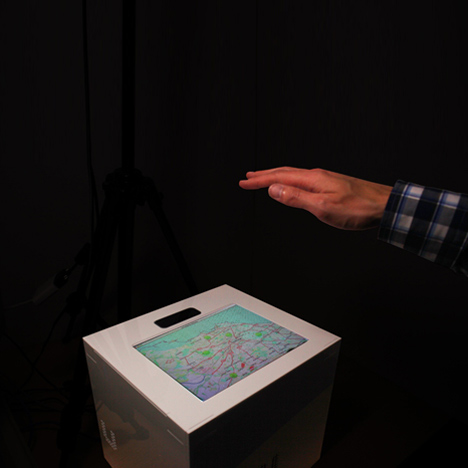

News: researchers at Bristol University in the UK have developed a way for users to get tactile feedback from touchscreens while controlling them with gestures in mid-air.

The UltraHaptics setup transmits ultrasound impulses through the screen to exert a force on a specific point above it that's strong enough for the user to feel with their hands.

"The use of ultrasonic vibrations is a new technique for delivering tactile sensations to the user," explained the team. "A series of ultrasonic transducers emit very high frequency sound waves. When all of the sound waves meet at the same location at the same time, they create sensations on a human's skin."
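To illustrate the idea of sound waves meeting "at the same location at the same time", here is a minimal Python sketch of how firing delays for an array of transducers might be computed. It is not the team's implementation: the 4x4 grid layout, the 1 cm spacing, the 20 cm focal height and the function name `focus_delays` are all assumptions made for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C


def focus_delays(transducers, focal_point):
    """Return one firing delay (seconds) per transducer so that every
    wavefront reaches focal_point at the same moment."""
    distances = [math.dist(t, focal_point) for t in transducers]
    farthest = max(distances)
    # The farthest transducer fires first (delay 0); nearer ones wait
    # long enough for its wave to catch up.
    return [(farthest - d) / SPEED_OF_SOUND for d in distances]


# Hypothetical 4x4 grid, 1 cm apart, lying flat in the z = 0 plane.
grid = [(0.01 * i, 0.01 * j, 0.0) for i in range(4) for j in range(4)]
focus = (0.015, 0.015, 0.20)  # assumed point 20 cm above the grid centre
delays = focus_delays(grid, focus)
```

Moving `focus` and recomputing the delays would steer the point of sensation to a different spot above the screen.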

The Bristol University team wanted to take the intuitive, hands-on nature of touchscreens and add the haptic feedback associated with analogue controls like buttons and switches, which they felt was lacking from flat glass interfaces.

"Current systems with integrated interactive surfaces allow users to walk-up and use them with bare hands," said Tom Carter, a PhD student working on the project. "Our goal was to integrate haptic feedback into these systems without sacrificing their simplicity and accessibility."

Varying the frequency of vibrations targeted to specific points makes them feel different to each other, adding an extra layer of information over the screen.

One application of this, demonstrated in the movie, shows a map where the user can feel the air above the screen to determine the population density in different areas of a city, with higher density represented by stronger vibrations.
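As a rough illustration of the map example, the link between population density and vibration strength could be a simple linear scaling. The Python sketch below is an assumption, not the researchers' method: the function name `density_to_intensity`, the 0 to 1 intensity range and the linear mapping are all hypothetical.

```python
def density_to_intensity(density, max_density):
    """Map a population density to a relative vibration strength
    between 0.0 (nothing felt) and 1.0 (strongest output)."""
    if max_density <= 0:
        raise ValueError("max_density must be positive")
    # Linear scaling, clamped so out-of-range inputs stay within [0, 1].
    return min(max(density / max_density, 0.0), 1.0)
```

With this mapping, an area half as dense as the densest district would be felt at half the maximum strength.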

In addition to providing haptic feedback, the system can track the user's hand gestures using a Leap Motion sensor, so they can control what's on the screen without touching it.

The example given in the movie shows the controls of a music player operated by tapping the air above the play button and pinching the air above the volume slider. The ultrasound waves directed at these invisible control points pulse when the controls are operated, letting users feel that they are engaging with them so they can operate the system without looking.

Carter presented the project at the ACM Symposium on User Interface Software and Technology at the University of St Andrews in Scotland today.

Last month we reported on gesture-controlled software for designing 3D-printed rocket parts and we've also shown a transparent computer that allows users to reach "inside" the screen and manipulate content with their hands.