Google's Project Soli turns hand gestures into digital controls using radar

Google has unveiled an interaction sensor that uses radar to translate subtle hand movements into gesture controls for electronic devices, with the potential to transform the way they're designed.

Project Soli was one of the developments revealed by Google's Advanced Technology and Projects (ATAP) group during the company's I/O developer conference in San Francisco last week.

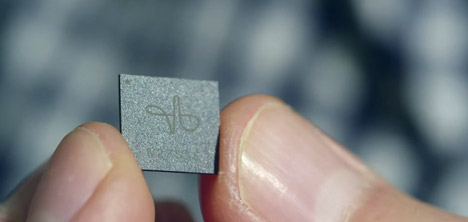

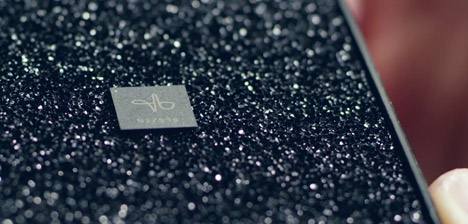

The team has created a tiny sensor that fits onto a chip. The sensor uses radar to track sub-millimetre hand gestures with high speed and accuracy, and uses them to control electronic devices without physical contact. This could remove the need to design knobs and buttons into the surface of products like watches, phones and radios, and even medical equipment.

"Capturing the possibilities of the human hands was one of my passions," said Project Soli founder Ivan Poupyrev. "How could we take this incredible capability – the finesse of the human actions and using our hands – but apply it to the virtual world?"

The chip emits waves in the radio frequency spectrum at a target. The sensor then receives the reflected waves and passes them to a computer circuit that interprets the differences between them.

Even subtle changes detected in the returning waves can be translated into commands for an electronic device.
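To illustrate why small changes in a reflected wave can reveal tiny movements, here is a minimal sketch of a general radar principle: a reflector that moves toward or away from the sensor changes the phase of the echo. It is not Google's implementation. The 60GHz carrier frequency, the function name and the example phase shift are all assumptions chosen for illustration and do not come from the team.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0

# Assumed carrier frequency for illustration only; not stated by the team.
ASSUMED_CARRIER_HZ = 60e9


def displacement_from_phase_shift(phase_shift_rad: float, carrier_hz: float) -> float:
    """Radial displacement (in metres) implied by a phase change in the echo."""
    wavelength_m = SPEED_OF_LIGHT_M_S / carrier_hz
    # The wave travels out and back, so a movement of d changes the path by 2d,
    # which changes the phase by 4 * pi * d / wavelength.
    return wavelength_m * phase_shift_rad / (4.0 * math.pi)


# Hypothetical measured phase change of 0.1 radians between two echoes.
shift_mm = displacement_from_phase_shift(0.1, ASSUMED_CARRIER_HZ) * 1000.0
print(f"{shift_mm:.3f} mm")
```

Under these assumptions the wavelength is about 5mm, so a phase change of 0.1 radians corresponds to a movement of roughly 0.04mm, well below a millimetre.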

"Radar has been used for many different things: tracking cars, big objects, satellites and planes," said Poupyrev. "We're using them to track micro motions; twitches of human hands then use it to interact with wearables and integrated things in other computer devices."

The team is able to extract information from the data received and identify the intent of the user by comparing the signals to a database of stored gestures. These include movements that mimic the use of volume knobs, sliders and buttons, creating a set of "virtual tools".
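The idea of comparing an incoming signal against stored gestures can be sketched as a nearest-template lookup. This is a simplified stand-in, not the team's actual pipeline: the feature vectors, gesture labels, function name and distance threshold below are all hypothetical.

```python
import math

# Hypothetical gesture "database": each label maps to a made-up feature vector.
# Real features would be derived from the radar signal; these are placeholders.
GESTURE_TEMPLATES = {
    "button_press": [0.9, 0.1, 0.0],
    "slider": [0.2, 0.8, 0.1],
    "volume_knob": [0.1, 0.3, 0.9],
}


def classify_gesture(features, templates=GESTURE_TEMPLATES, max_distance=0.5):
    """Return the closest stored gesture label, or None if nothing is close enough."""
    best_label, best_distance = None, float("inf")
    for label, template in templates.items():
        # Euclidean distance between the observed features and the template.
        distance = math.dist(features, template)
        if distance < best_distance:
            best_label, best_distance = label, distance
    return best_label if best_distance <= max_distance else None


print(classify_gesture([0.85, 0.15, 0.05]))  # close to the "button_press" template
print(classify_gesture([0.5, 0.5, 0.5]))     # not close to any template
```

The threshold lets the sketch ignore motion that does not resemble any stored gesture, rather than always forcing a match.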

"Our team is focused on taking radar hardware and turning it into a gesture sensor," said Jaime Lien, lead research engineer on the project. "The reason why we're able to interpret so much from this one radar signal is because of the full gesture-recognition pipeline that we've built."

Compared to cameras, radar has very high positional accuracy and so can sense tiny motions. Radar can also work through other materials, meaning the chips can be embedded within objects and still pick up the gestures.

The gestures chosen by the team were selected for their similarity to standard actions we perform every day. For example, swiping across the side of a closed index finger with a thumb could be used to scroll across a flat plane, while tapping a finger and thumb together would press a button.

Google's ATAP department is already testing hardware applications for the technology, including controls for digital radios and smartwatches. The chips can be produced in large batches and built into devices and objects.