Research company OpenAI has developed a programme that can turn simple text instructions into high-quality images.

Named DALL-E 2, the programme uses artificial intelligence (AI) to create realistic images or artworks from a text description written in natural language.

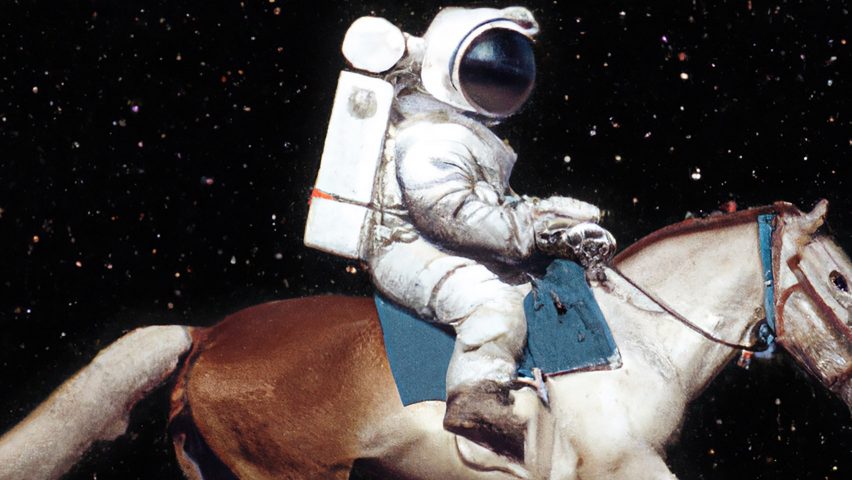

The descriptions can be quite complex, incorporating actions, art styles and multiple subjects. Some of the examples on OpenAI's blog include "an astronaut lounging in a tropical resort in space in a vaporwave style" and "teddy bears working on new AI research underwater with 1990s technology".

DALL-E 2 builds on OpenAI's previous tool, DALL-E, which launched in January 2021. The new iteration produces more astonishing results, thanks to higher-resolution imagery, greater textual comprehension, faster processing and some new capabilities.

Named after the Pixar robot WALL-E and the artist Salvador Dalí, DALL-E is a type of neural network – a computing system loosely modelled on the connected neurons in a biological brain.

The neural network has been trained on images and their text descriptions to understand the relationship between objects.

"Through deep learning it not only understands individual objects like koala bears and motorcycles but learns from relationships between objects," said OpenAI.

"And when you ask DALL-E for an image of a koala bear riding a motorcycle, it knows how to create that or anything else with a relationship to another object or action."

DALL-E 2 provides several image alternatives for each text prompt. It can also use the same natural-language descriptions to edit and retouch existing photos, a capability the original DALL-E lacked.

This feature, which OpenAI calls "in-painting", works like a more sophisticated version of Photoshop's content-aware fill, realistically adding or removing elements from a selected section of the image while taking into account shadows, reflections and textures.

For instance, the examples on the OpenAI blog show a sofa added to various spots in a photograph of an empty room.

OpenAI says that the DALL-E project not only allows people to express themselves visually but also helps researchers learn how advanced AI systems see and understand our world.

"This is a critical part of developing AI that's useful and safe," said OpenAI.

Originally founded as a non-profit by high-profile technology figures including Elon Musk, OpenAI is dedicated to developing AI for long-term positive human impact and curbing its potential dangers.

To that end, DALL-E 2 is not currently being made available to the public. OpenAI acknowledges that the application could be dangerous if it were used to create deceptive content, similar to current "deepfakes", or other harmful imagery.

It also recognises that AI inherits biases from its training data and so can end up reinforcing social stereotypes.

While OpenAI refines its safety measures, DALL-E 2 is only being shared with a select few users for testing. A content policy already bars users from making any violent or hateful imagery, as well as anything "not G-rated" or any political content.

This is enforced by filters and both automated and human monitoring systems.

DALL-E 2's ability to generate such images in the first place is also limited. All explicit or violent content was removed from its training data, so it has had blissfully little exposure to these concepts.

OpenAI was started by Musk, Y Combinator's Sam Altman and other backers in late 2015, although Musk has since resigned from the board. In 2019 it restructured as a "capped-profit" company, apparently to secure more funding, but its parent company remains a non-profit.

One of OpenAI's other projects is Dactyl, which involved training a robot hand to nimbly manipulate objects using human-like movements it taught itself.